Inference Scaling of ML deployments

- Project

- 20219 IML4E

- Type

- New service

- Description

Optimizing the performance of machine learning (ML) models typically focuses on intrinsic factors, such as the number of neurons, depth of layers, or adjusting the numerical precision of computations. Such approaches inherently involve modifying a model’s internal architecture, attributes and parameters, which can lead to trade-offs in accuracy. Alternative avenues of performance optimization can also be explored. This experiment framework focuses on evaluating REST and gRPC protocols as the two widely supported protocols across various model-serving frameworks.

- Contact

- Dennis Muiruri

- dennis.muiruri@helsinki.fi

- Research area(s)

- Serving, performance

- Technical features

The experiment framework provides a way to evaluate the following aspects of a model deployment.

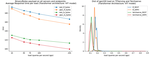

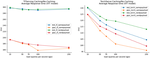

Performance of supported inference protocols: Performance differences between endpoints can be attributed to the underlying data exchange protocols for a given payload type. gRPC outperforms REST as seen by lower latencies across evaluated serving frameworks. The payload type matters due to serialization overhead.

Evaluation of Serving framework performance: Some frameworks can be deemed more appropriate for production than others based on factors such as their performance profiles. Models implemented using TorchServe achieved better performance compared to Tensorflow Serving after controlling for differences in payload types.

Caching effects: Model serving frameworks can have different caching effects. Stronger caching effect was shown in TorchServe, compared to TensorFlow Serving.

Scalability under load: How serving frameworks scale inference under increased load. CPU utilization is better under higher loads especially in a multicore setting. The gRPC protocol is natively asynchronous, therefore the gRPC endpoint delivers a higher load to the server compared to natively synchronous REST endpoint. gRPC therefore can provide better performance with lower engineering overhead.

- Integration constraints

None

- Targeted customer(s)

ML engineers involved in deployment, System Architects, Cloud service providers, AI/ML consulting firms.

- Conditions for reuse

Open access.

- Confidentiality

- Public

- Publication date

- 25-09-2024

- Involved partners

- University of Helsinki (FIN)

Images