Adversarial Test Toolbox

- Project

- 20219 IML4E

- Type

- New library

- Description

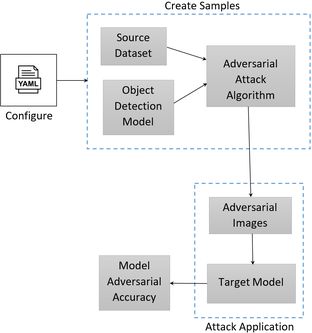

The Adversarial Test Toolbox is a Python-based tool for assessing the robustness of target models against adversarial attacks in two settings: white-box, where the adversarial examples are created on and applied to the same model, and black-box, where samples crafted on other models are transferred to the target model.
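The white-box/black-box distinction can be illustrated with a minimal, stdlib-only Python sketch. It is not the toolbox's code. The two binary logistic-regression "models", the `fgsm` helper and the `robustness_report` function are hypothetical stand-ins for the YOLO detectors and the attack pipeline. Accuracy on the target is measured on clean inputs, on inputs attacked with the target's own gradients (white-box), and on inputs attacked with a separate surrogate model and then transferred (black-box).

```python
import math


def predict_proba(model, x):
    """Probability of class 1 for a toy logistic-regression model (w, b)."""
    w, b = model
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def predict(model, x):
    return 1 if predict_proba(model, x) >= 0.5 else 0


def _sign(v):
    return (v > 0) - (v < 0)


def fgsm(model, x, y, eps):
    """One FGSM step; for logistic regression, d(BCE)/dx = (p - y) * w."""
    w, _ = model
    p = predict_proba(model, x)
    grad = [(p - y) * wi for wi in w]
    # Move each feature by eps along the gradient sign, then clip to [0, 1].
    return [min(1.0, max(0.0, xi + eps * _sign(gi))) for xi, gi in zip(x, grad)]


def accuracy(model, xs, ys):
    return sum(predict(model, x) == y for x, y in zip(xs, ys)) / len(xs)


def robustness_report(target, surrogate, xs, ys, eps):
    # White-box: adversarial examples crafted with the target's own gradients.
    white = [fgsm(target, x, y, eps) for x, y in zip(xs, ys)]
    # Black-box: examples crafted on the surrogate, then transferred to the target.
    black = [fgsm(surrogate, x, y, eps) for x, y in zip(xs, ys)]
    return {
        "clean": accuracy(target, xs, ys),
        "white_box": accuracy(target, white, ys),
        "black_box": accuracy(target, black, ys),
    }


target = ([4.0, 4.0], -4.0)      # decision boundary: x1 + x2 = 1
surrogate = ([4.0, -1.0], -1.0)  # weights feature 2 with the opposite sign
xs = [[0.25, 0.25], [0.75, 0.75], [0.375, 0.375], [0.625, 0.5]]
ys = [0, 1, 0, 1]
print(robustness_report(target, surrogate, xs, ys, eps=0.1875))
# {'clean': 1.0, 'white_box': 0.5, 'black_box': 1.0}
```

In this toy setup the surrogate weights the second feature with the opposite sign to the target. The transferred perturbation therefore cancels out on the target and the black-box attack has no effect, while the white-box attack halves accuracy. With real models, how well samples transfer typically depends on how similar the surrogate and target are.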

- Contact

- Jürgen Großmann, Fraunhofer FOKUS

- juergen.grossmann@fokus.fraunhofer.de

- Research area(s)

- ML model robustness.

- Technical features

The tool lets users define models, data, and adversarial attack algorithms to generate adversarial examples. The generated samples can then be applied to other target models to perform a black-box (transfer) attack. In its current state, the tool supports object detection datasets such as COCO and TTPLA, together with models such as YOLOv3 and YOLOv5, for creating and transferring adversarial samples. Users can choose among several attack algorithms, such as FGSM (Fast Gradient Sign Method) and PGD (Projected Gradient Descent), to create adversarial examples.
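FGSM and PGD can be sketched in a few lines for any model whose input gradient is available. The sketch below assumes a hypothetical binary logistic-regression model on inputs scaled to [0, 1], so that the input gradient of the cross-entropy loss has the closed form (p - y)·w. The toolbox's own attacks operate on YOLO detectors and are not reproduced here. The `ATTACKS` dictionary is likewise a hypothetical illustration of selecting an algorithm by name, not the toolbox's API.

```python
import math


def proba(model, x):
    """Toy logistic-regression model (w, b): probability of class 1."""
    w, b = model
    return 1.0 / (1.0 + math.exp(-(sum(wi * xi for wi, xi in zip(w, x)) + b)))


def input_grad(model, x, y):
    """Gradient of binary cross-entropy w.r.t. the input: (p - y) * w."""
    w, _ = model
    p = proba(model, x)
    return [(p - y) * wi for wi in w]


def _sign(v):
    return (v > 0) - (v < 0)


def _clip(v, lo, hi):
    return min(hi, max(lo, v))


def fgsm(model, x, y, eps):
    """Single step of size eps along the sign of the input gradient."""
    g = input_grad(model, x, y)
    return [_clip(xi + eps * _sign(gi), 0.0, 1.0) for xi, gi in zip(x, g)]


def pgd(model, x, y, eps, alpha, steps):
    """Repeated signed-gradient steps of size alpha, projected after each step."""
    x_adv = list(x)
    for _ in range(steps):
        g = input_grad(model, x_adv, y)
        x_adv = [xa + alpha * _sign(gi) for xa, gi in zip(x_adv, g)]
        # Projection: stay within eps of the original input (L-inf ball) and inside [0, 1].
        x_adv = [_clip(_clip(xa, x0 - eps, x0 + eps), 0.0, 1.0) for xa, x0 in zip(x_adv, x)]
    return x_adv


# Hypothetical registry: pick an attack by name with a shared (model, x, y, eps) signature.
ATTACKS = {
    "FGSM": lambda m, x, y, eps: fgsm(m, x, y, eps),
    "PGD": lambda m, x, y, eps: pgd(m, x, y, eps, alpha=eps / 4, steps=10),
}

model = ([4.0, 4.0], -4.0)
for name, attack in ATTACKS.items():
    adv = attack(model, [0.375, 0.375], 0, 0.1875)
    print(name, adv, round(proba(model, adv), 3))
```

FGSM takes one step of size `eps`; PGD takes several smaller steps of size `alpha` and projects back onto the L∞ ball of radius `eps` after each one (the random start often used with PGD is omitted here). On a linear model both reach the same point, because the single FGSM step already maximises the loss within the ball. PGD's extra iterations typically pay off on non-linear models such as object detectors.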

- Integration constraints

Windows or UNIX-based OS with Python 3.10.8 and PyTorch 2.2.1.
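A small pre-flight check can compare the running interpreter and the installed PyTorch against the configuration listed above. This is a hypothetical helper, not part of the toolbox. It reads the PyTorch version through `importlib.metadata` without importing `torch`, and it only reports differences, since the section does not say whether other versions are supported.

```python
import re
import sys
from importlib import metadata

# Versions listed under "Integration constraints".
EXPECTED_PYTHON = (3, 10, 8)
EXPECTED_TORCH = (2, 2, 1)


def parse_version(text):
    """Extract (major, minor, patch) from strings such as '2.2.1' or '2.2.1+cu121'."""
    match = re.match(r"(\d+)\.(\d+)\.(\d+)", text)
    if match is None:
        raise ValueError(f"unrecognised version string: {text!r}")
    return tuple(int(part) for part in match.groups())


def environment_mismatches():
    """Return human-readable differences from the listed configuration (empty if none)."""
    problems = []
    python_version = tuple(sys.version_info[:3])
    if python_version != EXPECTED_PYTHON:
        problems.append(f"Python {'.'.join(map(str, python_version))} found, 3.10.8 listed")
    try:
        torch_version = metadata.version("torch")
    except metadata.PackageNotFoundError:
        problems.append("PyTorch not installed, 2.2.1 listed")
    else:
        if parse_version(torch_version) != EXPECTED_TORCH:
            problems.append(f"PyTorch {torch_version} found, 2.2.1 listed")
    return problems


if __name__ == "__main__":
    for line in environment_mismatches() or ["environment matches the listed configuration"]:
        print(line)
```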

- Targeted customer(s)

Companies developing AI applications.

Academic research institutions focusing on adversarial attacks and defense mechanisms.

- Conditions for reuse

License information on request.

- Confidentiality

- Public

- Publication date

- 06-09-2024

- Involved partners

- Fraunhofer (DEU)